Hello, I’m Anar Amirli, a Machine Learning Engineer with an MSc from Saarland University. I currently work at an AI safety research incubator in Saarland, where I focus on detecting and mitigating social biases in large language models. Previously, I worked at the German Research Center for AI (DFKI), contributing to Explainable AI projects in medical imaging, anomaly detection and generative modelling. My work combines model design, evaluation and deployment to build transparent and reliable ML systems.

My engagement with AI safety and governance is shaped by my interest in biopolitics, structuralism and ethics. I’m especially curious about how policy frameworks and technical research can meaningfully contribute to the safer deployment of large models. I’m open to collaborating with interdisciplinary teams on safety audits, model evaluation pipelines and governance-driven research that connects technical metrics to regulatory requirements.

In my spare time, I enjoy reading, sports and, above all, dancing. I also like spending time with friends, mixing vinyl and making pottery.

Short CV

- 2025–now Research Fellow, AI Safety Saarland, Germany

- 2025 Master’s in Computer Science, Saarland University, Germany

- 2021–2025 Research Assistant in AI, DFKI, Germany

- 2021–2022 Junior Applied Scientist in AI, NTU Singapore, remote

- 2019 Bachelor’s in Computer Engineering, Baku Engineering University, Azerbaijan

- 2019 Machine Learning Intern, ATL Tech, Azerbaijan

- 2018 Data Science Intern, Middle East Technical University, Turkey

- 2017–2018 Software Developer, Nspsolutions LLC, Azerbaijan

Industrial Training & Certifications

- ongoing Agentic AI with LangChain and LangGraph (Coursera)

- ongoing MLOps Bootcamp: End-to-End ML Project Development (Udemy)

- 2025 Developing Machine Learning Solutions – AWS (Coursera)

- 2025 Generative AI with Large Language Models (Coursera)

Selected Projects

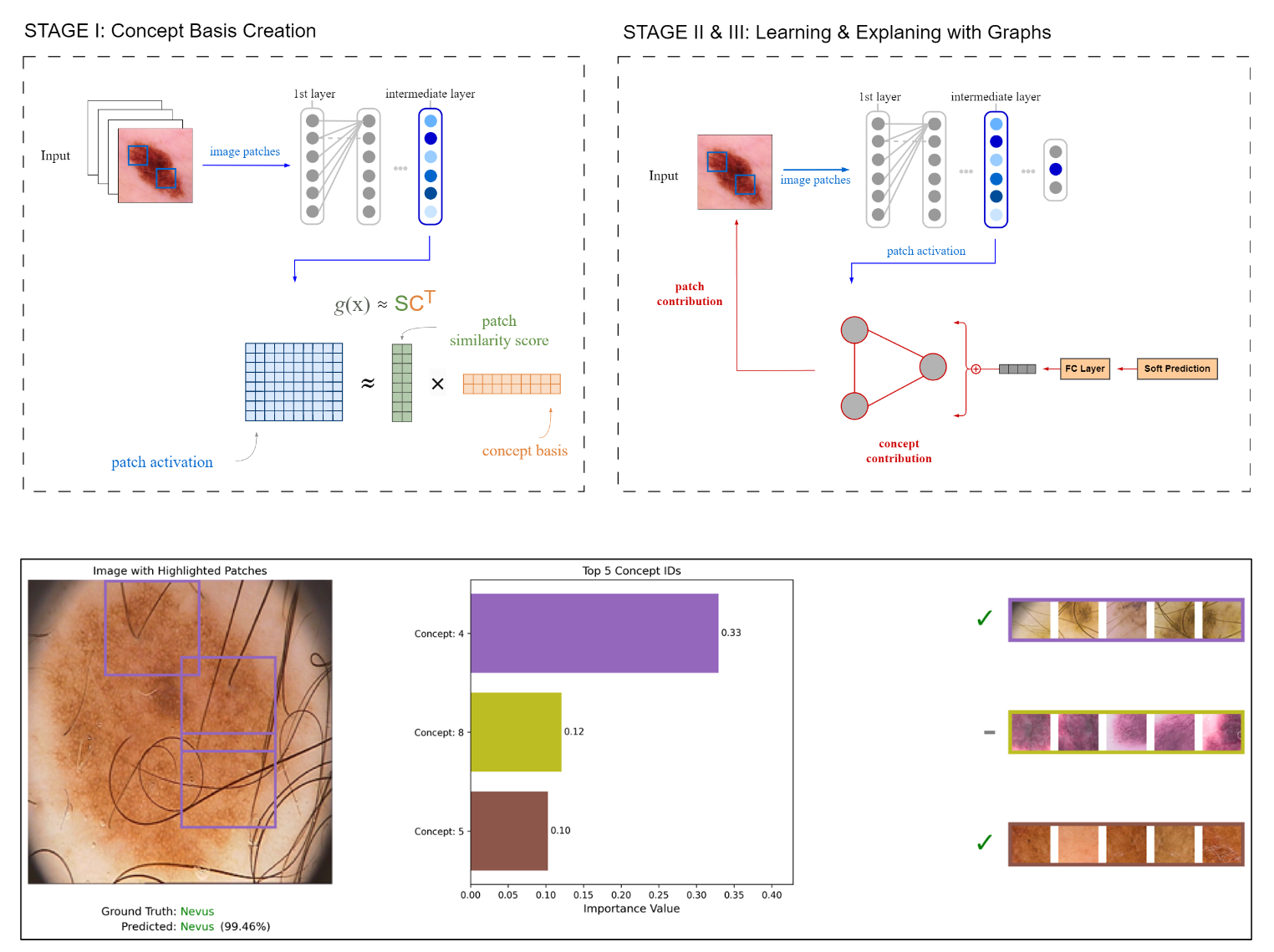

Explaining how black-box models make decisions is crucial for building trustworthy AI systems, especially in high-stakes domains like healthcare. Traditional attribution methods highlight where a model attends but not what it recognises. Concept-based methods address this by linking predictions to human-interpretable concepts. This work introduces an ante-hoc explainability framework that combines non-negative matrix factorisation (NMF) for unsupervised concept discovery with Graph Attention Networks (GATs) to model relationships between concepts, with a focus on medical imaging, in particular skin cancer diagnosis.

While concept bottleneck models (CBMs) offer promise, they suffer from key limitations: the difficulty of defining clinically meaningful concepts, the high cost of annotations, reliance on heatmaps for localisation, and potentially spurious alignment between visual features and textual labels. To avoid these pitfalls and leverage the strengths of vision models, we focus exclusively on visually grounded concepts. However, prior visually grounded methods often produce only global, class-specific explanations, neglect concept interactions, and provide unstable interpretability due to their post-hoc nature.

Our framework addresses these issues by (a) discovering visual concepts with NMF, and (b) constructing concept graphs that capture interactions through a shallow GAT, balancing expressiveness and interpretability. Although our models do not outperform heavily optimised task-specific CBMs, they demonstrate consistent generalisation across medical and standard datasets and, in some cases, surpass baseline CBMs, showcasing a more faithful, visually grounded alternative for explainability.
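To give a feel for the concept-discovery step, here is a minimal sketch that factorises a non-negative matrix of CNN activations (spatial positions × channels) as A ≈ W H using the classic Lee–Seung multiplicative updates. Rows of H can be read as concept directions in channel space, and columns of W show where each concept fires. The function name `discover_concepts`, the feature-map shape and the number of concepts k are assumptions for illustration; this is not the framework's actual implementation, and the GAT stage is not shown.

```python
import numpy as np


def discover_concepts(acts: np.ndarray, k: int, n_iter: int = 500,
                      eps: float = 1e-9, seed: int = 0):
    """Hypothetical helper: factorise acts (n x c) as W (n x k) @ H (k x c).

    Uses Lee & Seung multiplicative updates for the Frobenius-norm NMF objective.
    """
    if np.any(acts < 0):
        raise ValueError("NMF needs non-negative input, e.g. post-ReLU activations")
    rng = np.random.default_rng(seed)
    n, c = acts.shape
    W = rng.random((n, k)) + eps  # spatial "where" loadings
    H = rng.random((k, c)) + eps  # concept directions in channel space
    for _ in range(n_iter):
        H *= (W.T @ acts) / (W.T @ W @ H + eps)
        W *= (acts @ H.T) / (W @ H @ H.T + eps)
    return W, H


# Hypothetical post-ReLU feature map: 7x7 spatial grid, 64 channels
feature_map = np.maximum(np.random.default_rng(1).normal(size=(7, 7, 64)), 0.0)
acts = feature_map.reshape(-1, 64)      # (49, 64): one row per spatial position
W, H = discover_concepts(acts, k=5)
concept_maps = W.reshape(7, 7, 5)       # one spatial heatmap per concept
```

Because both factors stay non-negative, each concept can only add evidence, which is what makes NMF components comparatively easy to visualise as image regions.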

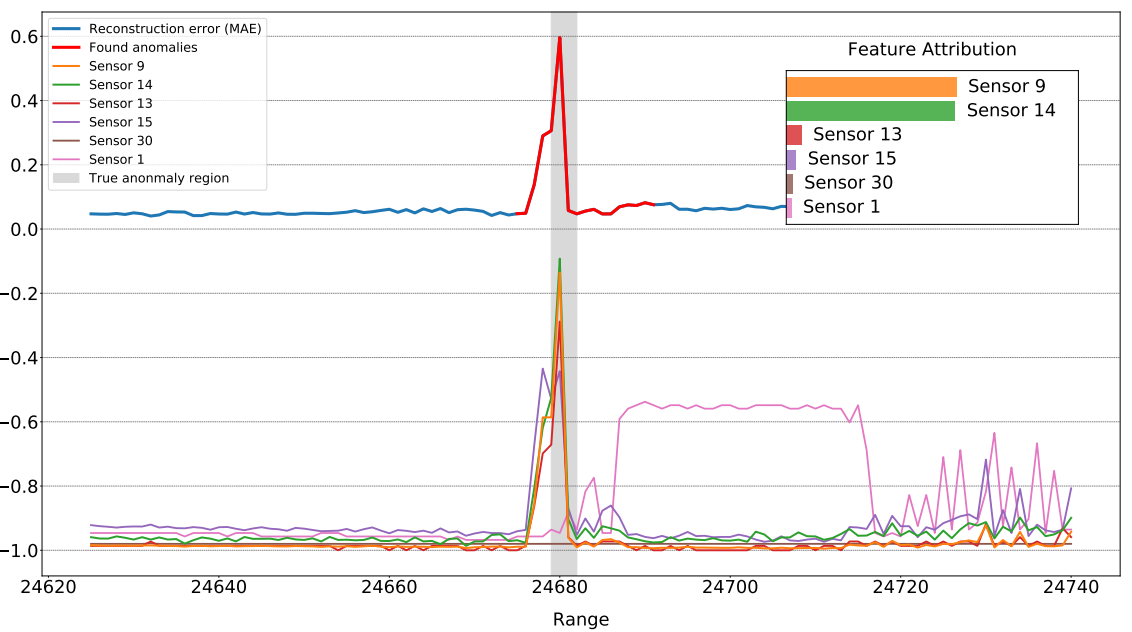

Real-time anomaly detection is critical in industrial settings to maintain quality and prevent costly failures. In this work, we study multivariate time series data from glass production and compare unsupervised detection and localisation methods. Our two-level approach detects anomalies, categorises their types, and localises faulty sensors with the help of explainable AI techniques.

Experiments showed that combining statistical pattern recognition with multivariate anomaly detection pipelines significantly improves both accuracy and interpretability. By categorising anomalies into distinct classes and highlighting faulty sensors, our method provides actionable insights for engineers monitoring production. This pipeline achieved promising results and demonstrates the value of integrating explainability into anomaly detection systems.
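As a deliberately simple stand-in for the two-level idea, the sketch below flags time steps whose largest per-sensor z-score (relative to an anomaly-free reference period) exceeds a threshold, then localises each flagged step to the sensor with the largest deviation. The z-score scoring, the threshold of 4.0, the injected fault and the function names are all assumptions; the project itself compares more elaborate unsupervised detectors and uses explainable AI techniques for localisation, and anomaly categorisation is not shown here.

```python
import numpy as np


def fit_reference(normal: np.ndarray):
    """Per-sensor mean and std from a period assumed anomaly-free; shape (T, S)."""
    mu = normal.mean(axis=0)
    sigma = normal.std(axis=0) + 1e-8  # avoid division by zero for flat sensors
    return mu, sigma


def detect_and_localise(x: np.ndarray, mu, sigma, threshold: float = 4.0):
    """Hypothetical two-level check: detect anomalous steps, then blame a sensor."""
    z = np.abs((x - mu) / sigma)               # (T, S) per-sensor deviation
    score = z.max(axis=1)                      # level 1: one score per time step
    flagged = np.flatnonzero(score > threshold)
    # Level 2: for each flagged step, the sensor with the largest deviation
    culprits = {int(t): int(z[t].argmax()) for t in flagged}
    return flagged, culprits


rng = np.random.default_rng(0)
normal = rng.normal(size=(1000, 6))            # synthetic reference data, 6 sensors
stream = rng.normal(size=(200, 6))
stream[120:125, 3] += 10.0                     # synthetic fault on sensor 3
flagged, culprits = detect_and_localise(stream, *fit_reference(normal))
```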

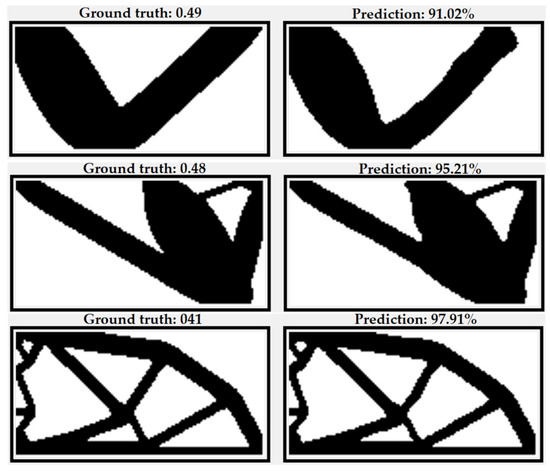

This project reframes topology optimisation as a multimodal-to-image translation task. We apply generative AI models, including GANs and diffusion models, to translate structured inputs into optimised 2D and 3D designs. The models consistently generate valid, high-quality structures at reduced computational cost.

Traditional topology optimisation relies on iterative solvers and finite element methods, which are computationally expensive and time-consuming. By leveraging modern generative approaches, we accelerate the design process while preserving structural accuracy. Notably, performance improved at higher iteration levels, underscoring the potential of generative AI to reshape how engineers approach design optimisation across multiple modalities.

We proposed a machine learning–based method to predict the ball location in football when it is occluded, using only players’ spatial information from optical tracking data. Trained on 300 matches of the Turkish Super League, our neural network models achieved strong predictive performance (R² ≈ 0.79 for the x-axis and 0.92 for the y-axis). This approach can complement vision-based tracking systems and enable more reliable ball tracking in football.
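For readers less familiar with the metric, R² = 1 − SS_res / SS_tot compares a model's squared error with that of always predicting the mean coordinate, and it is computed separately for each axis. The sketch below shows that computation on made-up coordinates; the positions and helper names are hypothetical and do not reproduce the project's data or models.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or len(y_true) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when y_true is constant")
    return 1.0 - ss_res / ss_tot


def per_axis_r2(true_xy, pred_xy):
    """Separate R^2 for the x (along the pitch) and y (across the pitch) coordinates."""
    xs_true, ys_true = zip(*true_xy)
    xs_pred, ys_pred = zip(*pred_xy)
    return r2_score(xs_true, xs_pred), r2_score(ys_true, ys_pred)


# Made-up ball positions in metres
true_xy = [(10.0, 5.0), (30.0, 20.0), (52.5, 34.0), (80.0, 60.0)]
pred_xy = [(14.0, 6.0), (27.0, 19.0), (50.0, 35.0), (84.0, 58.0)]
r2_x, r2_y = per_axis_r2(true_xy, pred_xy)
```

An R² of 0 means the model does no better than the mean position, so values around 0.8 to 0.9 indicate that player positions explain most of the variance in the ball's location.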

Get in touch

I’m currently open to collaboration, internships, and applied research roles in AI, explainability, healthcare, and AI safety. If you’d like to learn more about my work, feel free to get in touch. I’d be happy to hear from you!